DEEPFAKES FOR GOOD:

The Prosocial Potential of Synthetic Media

Deepfakes are a terrifying development of our post-truth era. If we’re already in a dark place with misinformation, one can only imagine the darker turns things can take.

And yet... what if deepfakes could be used in a radically different way? What if synthetic media enabled us to elicit the change we wish to see?

I’m an independent artist and the producer of Deep Reckonings, a series of explicitly-marked deepfake videos that imagine morally courageous versions of Mark Zuckerberg, Brett Kavanaugh, and other public figures. In this artist statement, I attempt to elaborate the potential of 'synthetic media for good' — or what I refer to as prosocial synthetic media — in order to better leverage synthetic media for socially beneficial purposes. I also explore the implications of synthetic media for our post-truth era, and open some philosophical doors into the nature of truth and reality. This statement is dedicated to the individuals and organizations working to alleviate the threats of synthetic media, and in particular: Henry Ajder, Aviv Ovadya, Sam Gregory at WITNESS, Shamir Allibhai of Amber, and Mike Pappas and Rupal Patel of the AITHOS Coalition.

THE LANDSCAPE

WHAT ARE DEEPFAKES AND HOW ARE THEY USED FOR GOOD AND ILL?

AI-generated synthetic media are media that have been manipulated with artificial intelligence. Deepfakes are a type of synthetic media that have been manipulated with the AI technique of deep learning — hence the term is a combination of deep learning and fake. Shallowfakes and cheapfakes use basic editing techniques like changing speed or removing frames, whereas deepfakes use deep learning to generate entirely new visual content. An example of a shallowfake is the video of House Speaker Nancy Pelosi, which was slowed down to make her appear drunk; an example of a deepfake is Spectre's video of Facebook CEO Mark Zuckerberg, which was manipulated to make him give a sinister speech about his power. Although there's an inverse relationship between realism and accessibility, it's getting easier, cheaper, and faster to make it sound and look like people are saying and doing things they never said or did.

Deepfakes can be comical, like the sub-genre of swapping Nicolas Cage's face into movies he never acted in. They can also be used for commercial purposes, for example to dub movies more persuasively and make synthetic voices for video games. Most often they're nefarious, created to demean public figures and disinform viewers. According to a 2019 report by synthetic media tracking firm Sensity, a startling 96% of deepfakes online consist of involuntary pornography, which swaps people's faces into pornographic films they never acted in, usually the face of a female entertainer like Scarlett Johansson or Daisy Ridley.

Amid the sea of nefarious deepfakery, there's a small but growing canon of prosocial synthetic media. A collaboration between the ALS Association and Canadian startup Lyrebird, Project Revoice creates personalized synthetic voices for ALS sufferers who've lost their ability to speak. MIT-based project In Event of Moon Disaster features a fake Richard Nixon delivering the contingency speech he would have given if Apollo 11 had failed, and serves to warn viewers that synthetic media can be used to rewrite not only the present but also the past.

How might we conceptually organize this budding landscape of prosocial synthetic media? Given the extraordinary power of a medium that can make it look like anyone is saying or doing anything, where might we find untapped potential for using synthetic media in prosocial ways?

A TYPOLOGY

HOW MIGHT WE CATEGORIZE PROSOCIAL SYNTHETIC MEDIA?

As a starting point for exploring the prosocial potential of synthetic media, what does it mean to be prosocial?

As a baseline, prosocial synthetic media are produced responsibly. While there’s currently no formal alignment on what it means to produce synthetic media in a responsible way, there are widely agreed-upon considerations that factor into the extent to which a synthetic media piece is considered responsibly-produced. Such factors include: 1) the piece’s potential for deception, which is a matter not just of intending to deceive but also of failing to sufficiently prevent deception; 2) whether a piece obtained consent from its protagonist, which isn't legally required (synthetic media fall under the categories of satire and commentary) but is still ethically meaningful; 3) the intent of the piece, which is subjective but also ethically meaningful; and 4) the piece's potential for normalizing problematic behavior, like non-consensual pornography. The relative 'weight' of these considerations also depends on whether a synthetic media piece is produced for public or private consumption, and commercial or non-commercial use.

I believe the most critical consideration in determining the extent to which a synthetic media piece is responsibly-produced is its potential for deception. I think the other considerations can be treated on more of a case-by-case basis. For example, I embrace the way human rights non-profit WITNESS approaches consent: public figures should have some baseline ownership of their likeness, but in general, the more public or powerful someone is, the less critical consent is. (For more on how to produce synthetic media responsibly, see the guidelines put forth by the AITHOS Coalition and Aviv Ovadya and Jess Whittlestone. For a discussion of how Deep Reckonings strove for responsibility, see the FAQ.)

Beyond being responsibly-produced, prosocial synthetic media fulfill a positive social purpose. Here, we’d want to distinguish between commercial and social utility. Again, there's no formal alignment on a distinction between commercial and social utility (and there’s meaningful overlap within the context of shared value), but for purposes of this discussion, we can loosely differentiate between synthetic media whose primary purpose is to serve commercial ends, like the dubbing of a blockbuster movie that serves the studio and consumers, versus social ends, like the dubbing of David Beckham's voice for a multi-lingual malaria PSA that benefits societies where malaria is endemic.

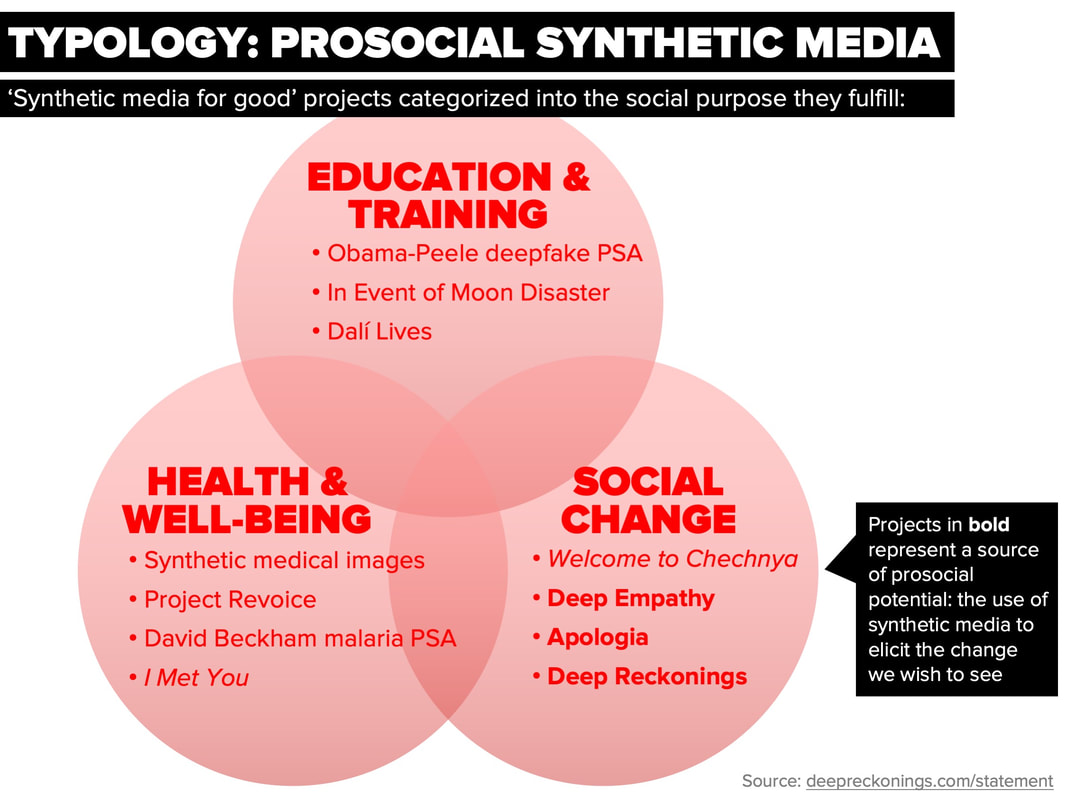

With a clearer sense of the prosocial landscape we're talking about — synthetic media that are responsibly-produced and fulfill a social purpose — I’d like to propose a preliminary typology of prosocial synthetic media. Roughly, we can place prosocial synthetic media into three categories based on the primary social purpose they fulfill: Education & Training, Health & Well-Being, and Social Change.

1. Education & Training: in this category, we find deepfakes that warn viewers about the dangers of deepfakes, like BuzzFeed’s Obama-Peele deepfake PSA, which features a fake President Obama voiced by comedian Jordan Peele giving a public service announcement about deepfakes, and In Event of Moon Disaster, which includes a deepfake that shows how deepfakes can be used to rewrite the past and a robust website that educates viewers about synthetic media. This category also includes synthetic media pieces that educate the public more broadly, like Dalí Lives, a collaboration between Florida’s Dalí Museum and ad agency Goodby, Silverstein & Partners to create a life-size deepfake of Dalí that teaches museum visitors about his life. I can also envision synthetic videos used for socially-oriented training purposes, for example videos of obstetricians or midwives in training performing challenging maneuvers they're still learning, so that they can witness themselves successfully perform those maneuvers.

2. Health & Well-Being: a second category of prosocial synthetic media consists of those used for healing purposes. In the physiological domain, health researchers are using deepfakes to create synthetic images of different disorders, like MRIs of brain tumors and images of skin lesions, in order to train their algorithms to better identify those disorders. Project Revoice creates synthetic voices for ALS sufferers, and David Beckham’s malaria PSA seamlessly translates his voice into eight malaria-relevant languages. In the context of psychological well-being, South Korean documentary I Met You chronicles the story of a grief-stricken mother who reunites with her deceased daughter in virtual reality, presenting the potential of synthetic media for bereavement therapy. However, as Henry Ajder notes, this use case falls into the morally-ambiguous realm of 'greyfakes,' given the need for research and safeguards to ensure that ‘synthetic reunions’ help rather than stunt people's recovery from loss.

3. Social Change: third, we encounter deepfakes that are used for purposes of social and political change. In Welcome to Chechnya, a documentary about the persecution of LGBTQ-identifying people in Chechnya, synthetic video is used to replace the faces of the protagonists, and thereby protect their identities. Synthetic media are also used in themselves as an advocacy tool, as with Deep Empathy, a collaboration between MIT and UNICEF to generate synthetic images of what London, Tokyo, and other major cities would look like if they were bombed, in order to build empathy for war refugees. Alethea AI's Apologia is a series of synthetic videos that feature world leaders apologizing for climate change in the future, and encourage the public to hold leaders accountable today. And Deep Reckonings, my own project, is a series of synthetic videos that feature public figures taking responsibility for their actions, in order to make more room for growth in public.

This typology isn't mutually exclusive (hence the Venn diagram) or collectively exhaustive — Beckham’s malaria PSA could also be considered educational, and there may be prosocial deepfakes that don't fit into any of these categories. Still, thinking in terms of education, healing, and social change can provide a useful starting point for organizing the landscape of prosocial synthetic media and discerning where untapped potential might be.

THE POTENTIAL

WHERE IS THERE POTENTIAL FOR PROSOCIAL SYNTHETIC MEDIA? HOW DOES THAT CONNECT WITH POST-TRUTH?

Where I see the greatest untapped potential for prosocial synthetic media is in a capacity that emerges across all three categories: to envision and elicit the change we wish to see. We see this capacity in the possibility of using synthetic media to envision more skilled obstetricians and midwives, more sober versions of ourselves, and more morally courageous versions of our public figures. This capacity distinguishes Spectre’s portrayal of Mark Zuckerberg from that of Deep Reckonings — Spectre leverages satire and social commentary, whereas Deep Reckonings envisions 'the Zuck we wish to see.'

A superpower of synthetic media is that we can know they’re fake, and they still affect us.

This capacity also leverages what I consider to be a superpower of synthetic media — that we can know they’re fake and they still affect us. We can know that a synthetic video of our future sober self is fake and still have it encourage us into recovery, or that a synthetic video of Brett Kavanaugh is fake and still have it move us to advocate for gender equality in a more compassionate way. We don’t have to sacrifice responsible production in order to leverage this source of prosocial potential: we make deepfakery explicit as part of envisioning more skilled, healed, courageous, and otherwise better versions of ourselves and our world.

The greatest untapped potential of synthetic media may be enabling us to elicit the change we wish to see... or more simply, to deepfake it ‘til we make it.

In her book The Apology, V (formerly Eve Ensler) imagines the apology her father never gave her for sexually abusing her as a child. The Sunday Times calls The Apology "a bold act of imaginative empathy" (emphasis added) — which is precisely what Deep Reckonings seeks to extend with the medium of synthetic video. Similarly, in my conversations with him, Dr. Miller connected the potential of synthetic media to envision healed versions of ourselves to existing therapeutic models, such as self-as-model, self-perception theory, and counter-attitudinal role-play. He recognized that this use of synthetic media could extend the application of these models. Meaning: the capacity of synthetic media to envision our desired reality simply extends an existing practice with new technology. And in some circumstances, we may actually need to envision the change in order to elicit it. Or more simply, sometimes we may need to deepfake it ‘til we make it.

Ultimately, we create the stories that create us. We can create stories in ways that are mindless or nefarious, spinning hateful conspiracy theories that exacerbate public health crises or motivate mass shootings. We can also create stories mindfully and prosocially: virtual reality therapy has been used to heal disorders ranging from PTSD to chronic pain, and psychodrama uses fantasy-based role-play to prepare participants for hypothetical future scenarios. It's said that the most underutilized tool in all of medicine is the placebo effect: the effect of believing we're being treated, irrespective of whether that's actually true. Speaking on Rebel Wisdom, psychiatrist and scholar Iain McGilchrist says "it's not that we create reality, and it's not that reality independently exists from us. We midwife reality into being" (emphasis added). By crafting stories that heal us, prepare us for the future, and otherwise materially affect our reality, we effectively co-create reality. And with synthetic media, we're gaining more power to co-create our reality. Instead of (or along with) being at the mercy of our stories, we're learning to craft synthetic narratives and experiences that 'midwife' our desired reality into being. Which is why the next step for Deep Reckonings is to make it real.

In this light, the implications of synthetic media for our post-truth crisis look different. On one hand, nefarious deepfakes threaten our ability to know what’s true and maintain a shared sense of reality. On the other hand, prosocial synthetic media can help us build a more purposeful relationship with truth and reality, which I'd humbly propose is necessary for transcending our post-truth crisis. Echoing Deep Reckonings' imaginary Alex Jones, "It's not about believing that everything is fake, or everything is real, or even somewhere in between. It's about believing what’s actually true, but not stopping there! We can't just ‘report the facts’ like the lamestream media. All journalism should report the facts — it's about: whose facts? Told how? For what purpose?" The question isn't just is this true? but also what purpose does this truth serve? And sometimes, a given purpose is best served by non-truth — not in the sense of lies, but in the sense of purposeful fictions that enable us to co-create our desired reality. This reframe can act as an antidote to the "liar's dividend" — the phenomenon whereby the more fake content there is, the more liars can call "fake news" on non-fake content. Armed with an understanding that truth can be partial and reality can be co-created, we're less vulnerable to the claim that something isn't the capital "T" Truth.

What if thinking that Deep Reckonings was real was more likely to result in the real reckonings of its protagonists?

In this respect, truth is not just an end in itself, but also a means to an end: namely, a means to the end of making sense of and co-creating reality. Which raises a provocative possibility: what if thinking Deep Reckonings was real was more likely to result in the real reckonings of its protagonists? Echoing the imaginary Joe Rogan in Alex Jones’ Deep Reckonings video, what if Jones pretended his video was real in order to help him stop broadcasting lies and ultimately... change? Or what if Jones acknowledged his video might be real or fake, but wouldn't say which, more akin to a placebo effect? Would that be inherently problematic because he wouldn't be telling the truth, or could it be conceived of as an act of co-creating reality? Put simply: under what circumstances do we prioritize truth versus other values, like social progress? This may seem like a blasphemous question to ask at a time when truth is under attack, but I'd argue the contrary: now is precisely the moment to wrestle with the value of truth, so that we can defend it.

Returning to the point at hand: the notion that evolving our relationship with truth and reality is necessary for transcending our post-truth crisis is an enormous statement. If you're intrigued, watch this video essay and let's talk :) For now, I'll conclude with the bold suggestion that while deceptive deepfakes exacerbate our post-truth crisis, prosocial synthetic media may actually help us transcend it, by enabling us to elicit the change we wish to see and, in turn, to create a more rightful place for purposeful fiction.

AN INVITATION

LET'S LEVERAGE THE POWER OF SYNTHETIC MEDIA FOR PROSOCIAL PURPOSES

Given the threats posed by synthetic media, is it ever worth releasing a deepfake into the wild? The question is a fair one, but deepfakes are neither inherently good or bad (technological determinism), nor neutral and entirely dependent on what we do with them (social determinism). As philosopher of technology Langdon Winner articulates, most technologies hold potential for good and harm, and therefore require thoughtful stewardship.

To help us steward synthetic media towards prosocial ends, this artist statement attempts to conceptually organize the landscape of prosocial synthetic media — categorized into whether they primarily fulfill the social purpose of education, healing, or social change. This statement also highlights a ripe source of prosocial potential — for using synthetic media to envision and elicit the change we wish to see — and introduces the possibility that prosocial synthetic media might help alleviate our post-truth crisis. Finally, this statement is an invitation to members of the synthetic media community: to think about how you might organize the landscape, identify where you see untapped prosocial potential, and be ambitious about leveraging the power of synthetic media for prosocial purposes.

In his book The Road Home, Buddhist teacher Ethan Nichtern describes the meditative practice of visualization as creating a "mindfully creative space, a kind of movie studio that...benefits sentient beings." He notes that "all visualizations end by dissolving imagination and returning to naked awareness" because "the point of visualization is to return to the present moment more confidently, more empowered, and more available to others" (emphasis added). In a similar spirit, may we use prosocial synthetic media to create a kind of movie studio that benefits us. May we return from our synthetic experiences more confident, empowered, and available to contend with the reality of our lives. And may we be more deliberate and creative about the reality we co-create.

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.